I pressed the button: PE exam marking now leads the world

Last week, my team and I pressed a button 🔴. It was a technical switch, but it meant that machine learning (ML) became the primary method ExamSimulator uses to identify correct responses in students’ PE exam answers. Until this point, when students wrote prose-based responses to PE exam questions (including extended writing questions), manually written mark schemes were the primary point of comparison and machine learning was used as a back-up. Now, in September 2022, less than two years after ExamSimulator launched, machine learning is the primary method by which ExamSimulator auto-identifies potentially correct responses.

In other words, academic PE teachers now have the world’s first-ever software capable of interpreting students’ writing in all of its varieties and identifying a huge and increasing range of potentially correct responses.

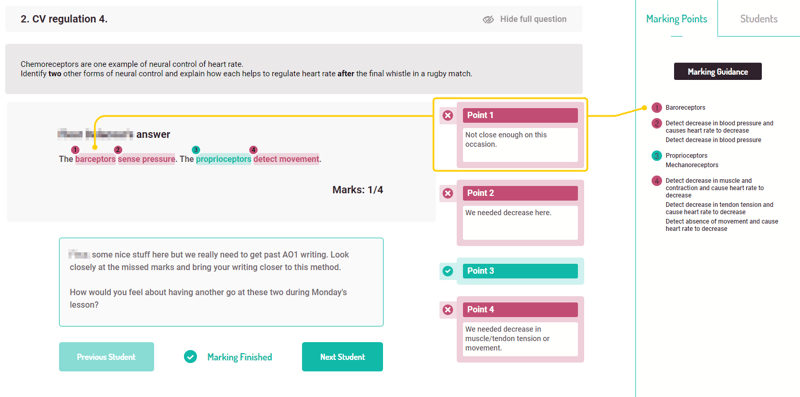

Take a look at the example below. I have sampled six different phrases that have been entered into the ML bank for the same question. The question is “Define stroke volume.” and is taken from AQA GCSE PE (9-1). In total, this question has over 30 ML entries, and you can see six of them below. I want to remind you that a student does not need to match these phrases exactly, nor is a mark automatically awarded when they do. A teacher may still mark the phrase as incorrect during their marking (perhaps because it is too vague or lacks detail). Spelling variations that are still phonetically equivalent are accepted and highlighted for the teacher to make a decision, and different word orders are also accepted. In other words, a student’s answer only needs to come close to one of more than 30 entries for it to be highlighted.

- The student response is highlighted and ratified by the teacher.

- The score is the number of times a student has written a matching phrase, ML Phrases has highlighted it for the teacher, and the teacher has marked it correct.

- The phrase is agreed by the system and released into the wild.

- The phrase is blocked - it will no longer be highlighted or fed through into the machine learning bank.
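The matching behaviour described above (close but not exact wording, tolerated misspellings, any word order, blocked phrases never highlighted, and a score that only rises when a teacher confirms a highlight) can be sketched in a few lines of Python. This is a hypothetical illustration, not ExamSimulator’s code: the names `MLPhrase`, `Status`, `matches`, `highlight` and `record_decision` are invented for this sketch, and the character-similarity ratio from Python’s `difflib` (with an assumed threshold of 0.8) stands in for whatever spelling tolerance the real system uses.

```python
import re
from dataclasses import dataclass
from difflib import SequenceMatcher
from enum import Enum


class Status(Enum):
    # Hypothetical lifecycle states, loosely mirroring the legend above.
    PENDING = "pending"    # new rule awaiting human review
    RELEASED = "released"  # agreed and "released into the wild"
    BLOCKED = "blocked"    # never highlighted again


@dataclass
class MLPhrase:
    text: str
    status: Status = Status.PENDING
    score: int = 0  # times a highlight was confirmed correct by a teacher


def _words(text: str) -> list[str]:
    # Lower-case and keep alphabetic runs only, so punctuation is ignored.
    return re.findall(r"[a-z]+", text.lower())


def _close(a: str, b: str, threshold: float) -> bool:
    # Assumed spelling tolerance: difflib's similarity ratio (0.0 to 1.0).
    return SequenceMatcher(None, a, b).ratio() >= threshold


def matches(phrase: str, answer: str, threshold: float = 0.8) -> bool:
    """True if every word of the phrase has a close match somewhere in
    the answer. Word order is deliberately ignored."""
    answer_words = _words(answer)
    return all(any(_close(w, a, threshold) for a in answer_words)
               for w in _words(phrase))


def highlight(answer: str, bank: list[MLPhrase]) -> list[MLPhrase]:
    # Only released phrases are suggested; a highlight is not a mark.
    return [p for p in bank
            if p.status is Status.RELEASED and matches(p.text, answer)]


def record_decision(phrase: MLPhrase, teacher_says_correct: bool) -> None:
    # The score only increases when the teacher confirms the highlight.
    if teacher_says_correct:
        phrase.score += 1
```

Note that `highlight` only suggests content. Awarding the mark stays with the teacher, which is why the score changes only in `record_decision`.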

Once again, I want to mention that you are viewing a sample of six phrases from a range of tens of phrases for one single question. In other words, our Machine Learning is highly likely to automatically identify the vast majority of potentially correct answers that a student writes.

What is the context?

ExamSimulator was launched by The EverLearner Ltd in January 2021 and features thousands of exam questions, mark schemes and marking guidance across GCSE, A Level, BTEC, Cambridge National and Cambridge Technical PE and Sport courses. One feature of ExamSimulator is a teacher marking and review environment: a smart system in which teachers allocate marks and give students feedback on the quality of their responses, which students typically write online (although offline exams are also supported). The system also features auto-identification software that highlights potentially correct content for the teacher to make a decision about.

The software is now active in over 750 PE departments, and the volume of data flowing through the system is large.

But there is also a human element. Every time a teacher marks a new, unique student response as correct, ExamSimulator learns from this new input. We call this Machine Learning (ML). Within this process is a real-life PE teacher called James (me), who reads every new ML rule (although we will soon move to a more automated algorithm to save James from working all hours). These new rules are completely anonymous: I do not know which teacher is marking which student’s work, or which school they are from. This gives me a unique and objective perspective on how tens of thousands of PE students are answering questions and how thousands of PE teachers are marking their students’ writing. After 20 months of activity, we have over 75,000 pieces of evidence, which form the basis of what follows.

What have we found?

Things to celebrate

Good news: with support from PE teachers, ExamSimulator is accurately identifying correct responses that their students write. The accuracy of awarding marks for correct responses is excellent. This should not be undervalued, as students would very quickly become disillusioned if their good answers were not credited.

Students have the opportunity to write lots of answers on a regular basis. Our data tells us that exam-style writing is no longer the “sometimes activity” tacked onto the end of lessons or set “only for homework”, but has become a frequent, integral part of lessons. I would go further. In numerous centres, students are engaging with exam writing continuously, meaning that exam writing and review is a normal part of every learning episode and, rather than being an assessment tool, is a highly formative experience for students.

Students are receiving feedback that is high quality, rapid and clearly diagnostic. We know from the writing of Kirschner how important feedback is to the learning process: “No feedback, no learning” should be taken literally. I am proud to report that students are receiving far better and earlier feedback than they did before the launch of ExamSimulator. Feedback causes learning. Rapid, diagnostic feedback causes outstanding learning.

Furthermore, because potentially correct answers are auto-identified, teachers spend their marking time providing feedback rather than mechanically finding answers and checking them against a mark scheme. I believe this is one of the most fundamental points to reiterate. Teachers do not, and will not, spend less time marking. Rather, they now spend the same marking time on higher-impact behaviours, such as providing detailed directive and epistemic feedback. I summarise this in the following table:

| "Traditional" exam marking | "Quality" exam marking through ExamSimulator |
| --- | --- |
| Teachers set a narrow range of questions from past papers. By definition, these questions will never be asked again in the “real” exam. | Teachers set a broad range of questions that are exam-board and course-specific and are likely to feature in future exams. |
| Teachers spend the majority of their marking time locating correct answers. | Teachers spend little time locating correct answers. |
| Teachers spend time referencing and annotating answers against the mark scheme points. | Teachers spend no time referencing and annotating answers against the mark scheme points. |
| Teachers spend time tallying points and calculating percentages. | Teachers spend no time tallying points and calculating percentages. |
| Teachers spend time transferring marks into mark books. | Teachers spend no time transferring marks into mark books. |
| Teachers lack the time to provide detailed feedback. | Teachers spend the majority of their marking time providing detailed feedback. |
| Teachers lack the time to produce diagnostic reports. | Teachers and students always receive a diagnostic report on performance. |
| Teachers have no convenient mechanism to cause future learning in the weaker areas of knowledge or skill. | Teachers have a convenient mechanism to cause future learning in the weaker areas of knowledge or skill. |

Areas for improvement

A very clear trend in our data is that responses that are vague, and in some cases wrong, are sometimes being awarded marks by teachers even though ExamSimulator has not recognised them as correct. To avoid this, teachers should bear the following points in mind:

It needs to be stressed that, in exam conditions, misspellings can still be credited IF that misspelling is still phonetically equivalent. What I am reporting here is that sometimes teachers mark non-phonetically equivalent answers as correct.

Example: Marking “barceptors” as correct (for “baroreceptors”). This answer should be marked incorrect specifically because it is not phonetically equivalent: two entire syllables are missing. If the answer had been “baroorecptor”, “barroreceptor”, “baroreceptar” or even “barrooreceptar”, ExamSimulator would have recognised it and the mark should be awarded; “barceptor”, however, is incorrect.
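One well-known, if crude, way to approximate “phonetically equivalent” in software is the Soundex code. The sketch below is an assumption for illustration, not a description of how ExamSimulator works: it computes a full-length Soundex-style key (without the usual truncation to four characters) and treats two spellings as equivalent when their keys match. The names `phonetic_key` and `phonetically_equivalent` are hypothetical. Under this rule, the acceptable spellings listed above all share a key with “baroreceptor”, while “barceptor” does not.

```python
# Soundex digit groups: letters in the same group "sound alike".
_GROUPS = {
    "bfpv": "1", "cgjkqsxz": "2", "dt": "3",
    "l": "4", "mn": "5", "r": "6",
}
_CODES = {ch: digit for letters, digit in _GROUPS.items() for ch in letters}


def phonetic_key(word: str) -> str:
    """Full-length Soundex-style key (no truncation to four characters)."""
    letters = [c for c in word.lower() if c.isalpha()]
    if not letters:
        return ""
    key = [letters[0].upper()]         # keep the first letter literally
    prev = _CODES.get(letters[0], "")
    for ch in letters[1:]:
        code = _CODES.get(ch, "")      # vowels, y, h and w have no code
        if code and code != prev:      # collapse runs of the same sound
            key.append(code)
        if ch not in "hw":             # h/w do not break a run; vowels do
            prev = code
    return "".join(key)


def phonetically_equivalent(attempt: str, target: str) -> bool:
    # Hypothetical rule: equivalent when the phonetic keys are identical.
    return phonetic_key(attempt) == phonetic_key(target)


if __name__ == "__main__":
    for attempt in ["barroreceptor", "baroreceptar", "barceptor"]:
        print(attempt, phonetically_equivalent(attempt, "baroreceptor"))
    # barroreceptor True / baroreceptar True / barceptor False
```

Soundex is blunt: it does not encode vowels, and it keeps the first letter literally, so “fysical” and “physical” get different keys. A key match is therefore at most a prompt for the teacher’s judgement, never a substitute for it.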

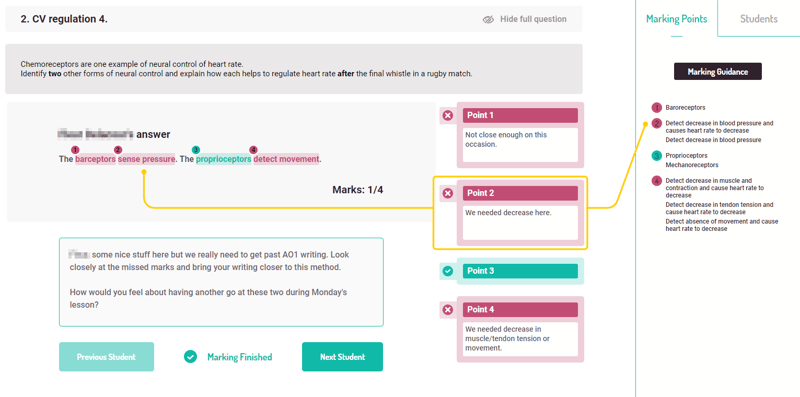

Example: Marking “(baroreceptors) sense pressure” as correct when the marking point states “(baroreceptors) detect decrease in blood pressure and cause heart rate to increase”. Without the word decrease (or equivalent), this point should not be awarded.

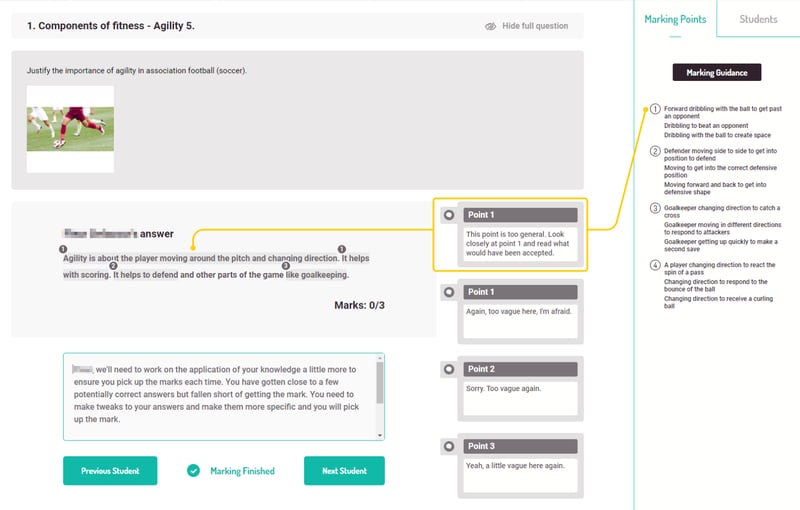

Example: Awarding a mark for “Agility is about the player moving around the pitch and changing direction” when the marking point is “Forward dribbling with the ball to get past an opponent.” We are not suggesting that the student needs to write this idea exactly, but that their answer needs to be detailed enough to get them the mark. I have previously written guidance on application/AO2 writing and have advocated for the EIO methodology. You can read about EIO writing here.

Example: Marking “Fartlek training is a form of interval training” as correct when the marking point states “Fartlek is a form of continuous training that features changes to intensity, incline or terrain”.

So, what does this mean?

ExamSimulator provides access to the broadest range of teacher marking ever.

Whilst we mustn't jump to conclusions too early, we already have a data set of 75k actions from thousands of different teachers. It will be interesting to see whether this pattern holds at 100k and 1M pieces of data. However, it is also fair to say that 75k is a substantial number and is, in fact, the broadest range of teacher-marking data ever compiled in any subject, in any system in the world. It is for this reason that we wanted to report our initial findings.

“Generous marking” does not encourage learning.

When teachers override ExamSimulator (which, of course, they are entitled to do), some students are being reassured that their answers are correct when, in the “real thing”, the same answers would definitely not attract marks. This gives the student unreliable information about their current performance level. Furthermore, it takes away the student’s opportunity to receive the diagnostic information that ExamSimulator naturally provides.

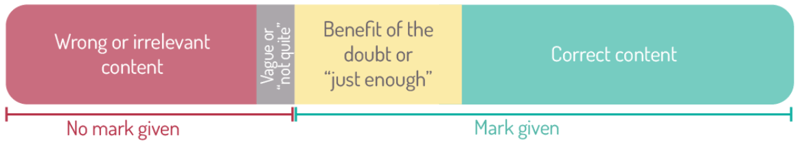

The community sometimes “fills in the gaps”.

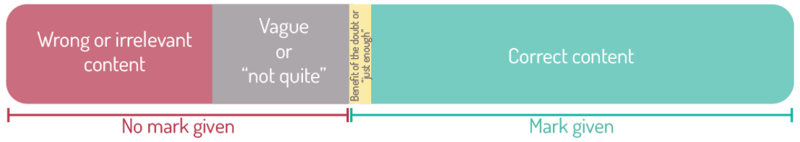

The vast majority of ExamSimulator + teacher marking is hyper-accurate. However, teachers are sometimes “filling in the gaps” for students. What I mean by this is that teachers are sometimes giving too much benefit of the doubt to a response. Teachers might think to themselves “It’s close enough” or “I know what they meant to write” and award a mark even if ExamSimulator has not recognised the answer as correct. We urge teachers to avoid this behaviour, as it provides a false picture to the student. Teachers need to compassionately inform students of when their answers fall short of being correct and in what way they fall short. This is the zone where learning occurs the most and needs to be the focus of teacher marking.

Look closely at this first image. At the moment, this is how teachers are collectively marking student responses in PE exams:

What marking is looking like NOW

Now look at the following image. This is what marking of PE exam answers should look like right now:

What marking should look like NOW (based on student accuracy levels)

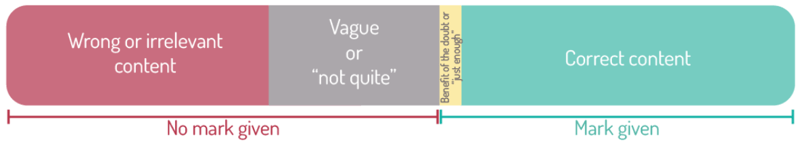

Finally, have a look at this last image. With the support of ExamSimulator, this is what marking is anticipated to look like in 12 months’ time:

Where we anticipate marking being IN 12 MONTHS’ TIME:

You will notice that ExamSimulator is directly causing students to answer more questions correctly. In other words, the quality of student writing is increasing.

Where do we go from here?

We urge all PE teachers and the entire community to use ExamSimulator with their students as frequently as they possibly can. The advantages are so significant that there will be a notable difference between the performance of students who prepare for their exams using ExamSimulator and those who don’t. Furthermore, every marking episode enriches ExamSimulator with new learning and a broader understanding of “correct” responses. We firmly believe that, during 2023, PE teachers will have access to the world’s first-ever fully automated practice exam software capable of marking human prose more accurately than a human teacher does on average. I want to pause here and make this point again. In 2023, PE, across courses such as GCSE PE, A Level PE, Cambridge Nationals and BTEC Tech, will be the first sector in the world to have fully automated exam software.

Finally, we at The EverLearner will continue to monitor the data and report our findings back to you. We truly believe that this software is rapidly improving student exam and coursework performance across the entire PE theory sector.

Thank you for reading my blog.
